This page summarises some techniques for automating administration tasks, especially node management.

NMIS Cluster Node Admin

When using an opHA NMIS cluster, most node administration is performed on the "Main Primary" server. For more details, see opHA 3 Redundant Node Polling and Centralised Node Management.

Get opConfig working on all my nodes

The opConfig application runs on the servers that talk to the end devices (the pollers). You don't need to install opConfig on your primary server for it to function. Some aspects of opConfig node administration can be done on the primary server and then synchronised to the pollers.

Use the opAdmin GUI to bulk edit nodes

The opConfig User Manual (opConfig 4 User Manual) covers this in detail. If you are using an opHA cluster, you need to activate the nodes on the primary and then run the discovery on the poller.

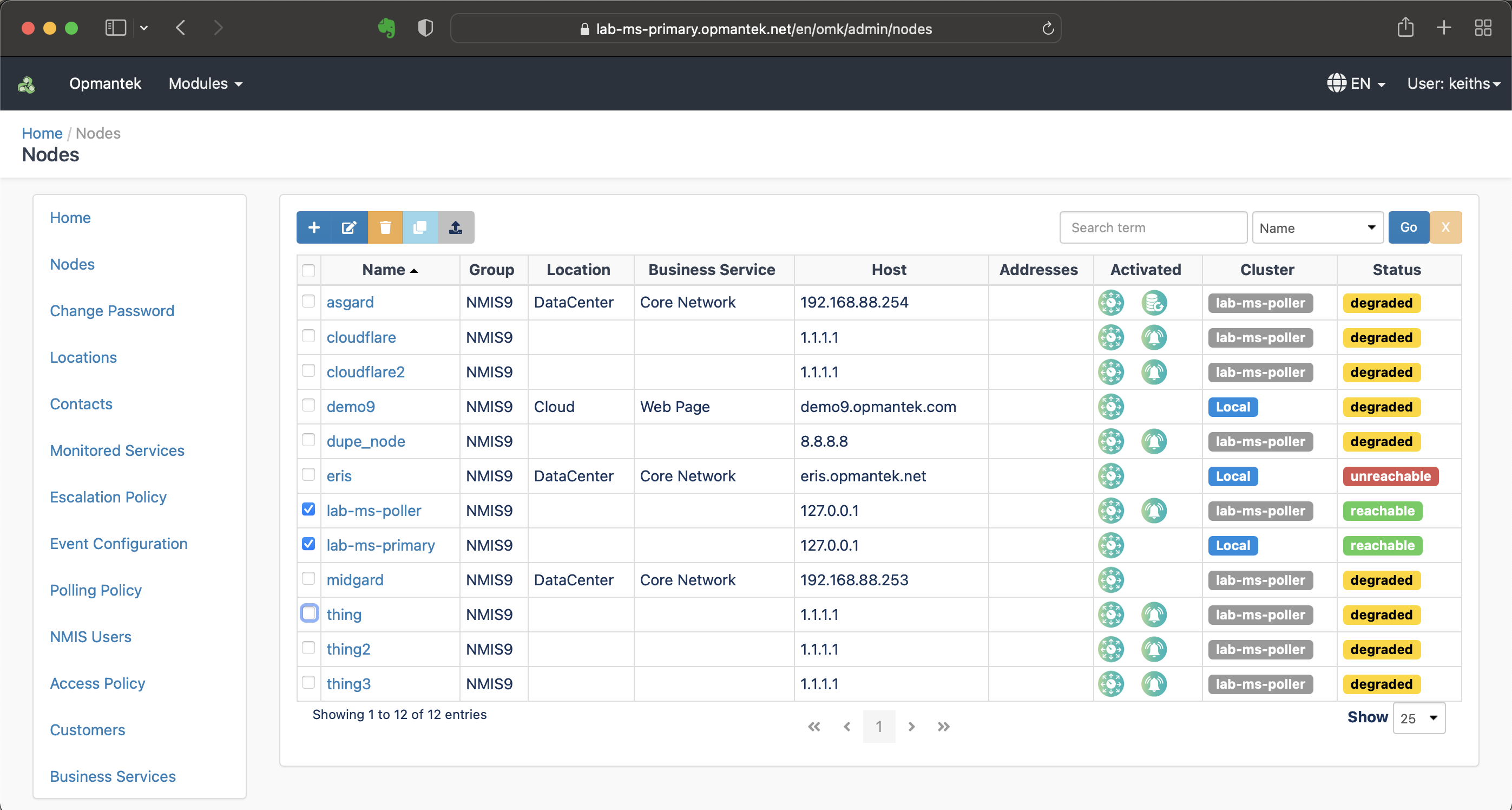

In summary, on the primary, open the Node Admin tool (https://server.domain.com/en/omk/admin/nodes) and select the nodes.

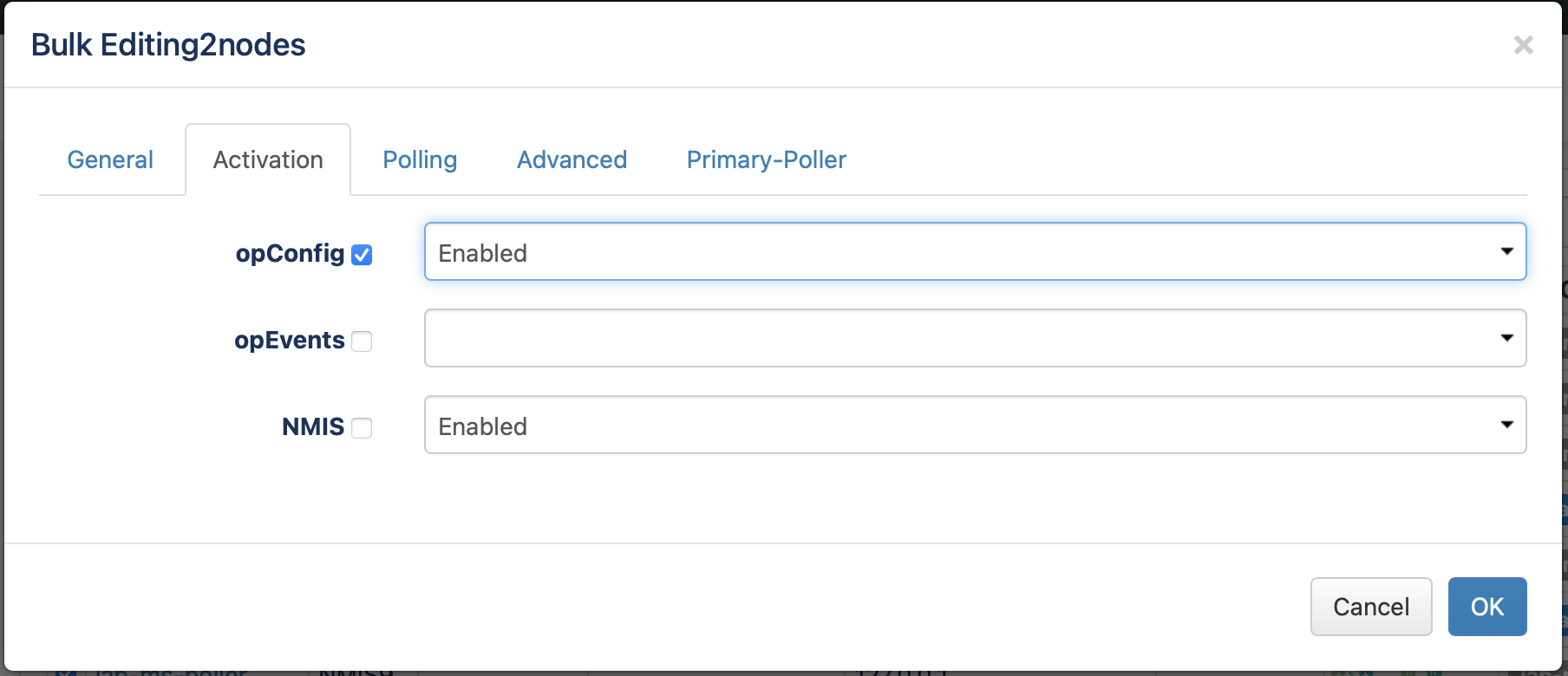

Then activate them for opConfig and click OK.

This activates many nodes at once, and the changes are sent to the relevant pollers.

Automating for many nodes using simple scripts and CLI tools

Scripts are useful when you want a task to run repeatedly, or when there are so many items to click on that scripting is faster than clicking.

Get a list of nodes using node_admin.pl

Getting a list of nodes is easy: just ask node_admin.pl for one.

/usr/local/nmis9/admin/node_admin.pl act=list

You can limit the list to a single group of nodes by adding group=GROUPNAME:

/usr/local/nmis9/admin/node_admin.pl act=list group=GROUPNAME
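If you want to reuse the list inside a script, one option is to read it into a Bash array. This is a minimal sketch, assuming act=list prints one node name per line; the NODE_ADMIN variable and the load_nodes function are our own illustrative names (NODE_ADMIN lets you point the function at a different copy of the tool, for example when testing).

```shell
#!/usr/bin/env bash
# Sketch: read node names from node_admin.pl into a Bash array.
# Assumes "act=list" prints one node name per line; NODE_ADMIN and
# load_nodes are hypothetical names used for illustration.
NODE_ADMIN="${NODE_ADMIN:-/usr/local/nmis9/admin/node_admin.pl}"

# load_nodes [GROUPNAME]: fills the global array NODES, skipping blank lines.
load_nodes() {
    local line
    local args=(act=list)
    if [ -n "$1" ]; then
        args+=("group=$1")
    fi
    NODES=()
    while IFS= read -r line; do
        if [ -n "$line" ]; then
            NODES+=("$line")
        fi
    done < <("$NODE_ADMIN" "${args[@]}")
}
```

For example, after sourcing the file, run load_nodes GROUPNAME and then loop with for NODE in "${NODES[@]}". Reading line by line keeps each name intact, whereas for NODE in $NODELIST splits on any whitespace, should a node name ever contain spaces.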

Using a script to activate nodes for opConfig

The following Bash script activates opConfig for all nodes on the primary server; opHA then synchronises the change to all the pollers.

#!/usr/bin/env bash

if [ "$1" == "" ]
then
    echo This script will update the opConfig enabled property for all nodes.
    echo Give me any argument to confirm you would like me to run.
    echo e.g. $0 runnow
    exit 1
fi

NODELIST=`/usr/local/nmis9/admin/node_admin.pl act=list`

for NODE in $NODELIST
do
    /usr/local/nmis9/admin/node_admin.pl act=set node="$NODE" entry.activated.opConfig=1
done

The script can easily be modified to work on a subset of nodes by using a pattern match, e.g.

NODELIST=`/usr/local/nmis9/admin/node_admin.pl act=list | grep NODENAMEPATTERN`

Alternatively, you could use the group option to limit the list, e.g.

NODELIST=`/usr/local/nmis9/admin/node_admin.pl act=list group=GROUPNAME`

To run the script, create a file with its contents using your favourite editor; we used the filename enable-opconfig-all-nodes.sh. Then either make the file executable (chmod +x enable-opconfig-all-nodes.sh) or run it by prefixing it with bash. Remember to pass an argument to confirm the run, otherwise the script only prints its usage message.

sudo bash enable-opconfig-all-nodes.sh runnow
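The filter options above can also be combined into one reusable function. The sketch below takes an optional group and node name pattern, can print the commands instead of running them, and reports any node where node_admin.pl returned an error. It assumes act=list prints one node name per line; DRY_RUN, NODE_ADMIN and activate_opconfig are hypothetical names of our own, not node_admin.pl options.

```shell
#!/usr/bin/env bash
# Sketch: activate opConfig for a filtered set of nodes, with a dry run.
# NODE_ADMIN, DRY_RUN and activate_opconfig are hypothetical names,
# not options of node_admin.pl.
NODE_ADMIN="${NODE_ADMIN:-/usr/local/nmis9/admin/node_admin.pl}"

# activate_opconfig [GROUPNAME] [NODE_REGEX]
# With DRY_RUN=1 the node_admin.pl commands are printed, not run.
activate_opconfig() {
    local group="$1" pattern="${2:-.}" node failed=0
    local list_args=(act=list)
    if [ -n "$group" ]; then
        list_args+=("group=$group")
    fi
    while IFS= read -r node; do
        if [ -z "$node" ]; then
            continue
        fi
        local cmd=("$NODE_ADMIN" act=set "node=$node" entry.activated.opConfig=1)
        # </dev/null stops node_admin.pl from reading the node list on stdin
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "${cmd[*]}"
        elif ! "${cmd[@]}" </dev/null; then
            echo "failed to update $node" >&2
            failed=$((failed + 1))
        fi
    done < <("$NODE_ADMIN" "${list_args[@]}" | grep -E -- "$pattern")
    if [ "$failed" -gt 0 ]; then
        echo "$failed node(s) failed" >&2
        return 1
    fi
}
```

After sourcing the file, DRY_RUN=1 activate_opconfig GROUPNAME 'NODENAMEPATTERN' previews the changes, and running the same call without DRY_RUN=1 (as root, like the script above) applies them.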

opConfig Automation with a Primary and Poller Server

Credential Sets Created on all Servers in the Cluster

The first step is to create the credential sets on all servers in the cluster. There are APIs for doing this, or you can open opConfig in the GUI and use the menu option "System → Edit Credential Sets".

For API details, see opConfig Credential Sets API.

Configure the Nodes from the Primary

The following handy Bash script updates all the nodes and sets the OS info and connection details that opConfig needs to operate.

This example sets the details for Cisco devices running NXOS 7.0, using SSH.

The script also sets the Node Context Name and URL, so that opCharts and NMIS show a button for accessing the node in opConfig.

Replace YOURCREDENTIALSET with the credential set for these devices, and YOURPOLLERNAMEORFQDN with the FQDN or hostname of the poller, e.g. lab-poller.opmantek.net.

#!/usr/bin/env bash

if [ "$1" == "" ]
then
    echo This script will set the opConfig OS info and connection details for all nodes.
    echo Give me any argument to confirm you would like me to run.
    echo e.g. $0 runnow
    exit 1
fi

NODELIST=`/usr/local/nmis9/admin/node_admin.pl act=list`

for NODE in $NODELIST
do
    # multi-line command to make it easier to read.
    # \$node_name is escaped so that Bash passes the literal text $node_name to node_admin.pl.
    /usr/local/nmis9/admin/node_admin.pl act=set node="$NODE" \
        entry.activated.opConfig=1 \
        entry.configuration.os_info.os=NXOS \
        entry.configuration.os_info.version=7.0 \
        entry.configuration.connection_info.credential_set=YOURCREDENTIALSET \
        entry.configuration.connection_info.personality=ios \
        entry.configuration.connection_info.transport=SSH \
        entry.configuration.node_context_name="View Node Configuration" \
        "entry.configuration.node_context_url=//YOURPOLLERNAMEORFQDN/omk/opConfig/node_info?o_node=\$node_name"
done
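If you manage several device types, one approach is to keep the device-specific values in variables and build the argument list in one place. This sketch prints the node_admin.pl arguments for a single node, one per line, so you can check them before applying anything. The variable names and the opconfig_args function are hypothetical; the defaults are the NXOS 7.0 values and placeholders from the script above.

```shell
#!/usr/bin/env bash
# Sketch: build the opConfig settings for one node from variables.
# OS, OS_VERSION, CRED_SET, PERSONALITY, TRANSPORT and POLLER are
# hypothetical variable names; replace the placeholder values.
OS="${OS:-NXOS}"
OS_VERSION="${OS_VERSION:-7.0}"
CRED_SET="${CRED_SET:-YOURCREDENTIALSET}"
PERSONALITY="${PERSONALITY:-ios}"
TRANSPORT="${TRANSPORT:-SSH}"
POLLER="${POLLER:-YOURPOLLERNAMEORFQDN}"

# opconfig_args NODE: print one node_admin.pl argument per line.
# \$node_name inside double quotes stays as the literal text $node_name.
opconfig_args() {
    printf '%s\n' \
        act=set \
        "node=$1" \
        entry.activated.opConfig=1 \
        "entry.configuration.os_info.os=$OS" \
        "entry.configuration.os_info.version=$OS_VERSION" \
        "entry.configuration.connection_info.credential_set=$CRED_SET" \
        "entry.configuration.connection_info.personality=$PERSONALITY" \
        "entry.configuration.connection_info.transport=$TRANSPORT" \
        "entry.configuration.node_context_name=View Node Configuration" \
        "entry.configuration.node_context_url=//$POLLER/omk/opConfig/node_info?o_node=\$node_name"
}
```

To apply the settings to one node, you could run mapfile -t ARGS < <(opconfig_args "$NODE") followed by /usr/local/nmis9/admin/node_admin.pl "${ARGS[@]}". Building the list with printf keeps "View Node Configuration" as a single argument and leaves $node_name as literal text, matching the quoting in the script above.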

As in the earlier example, you can add a filter to the NODELIST statement to limit which nodes are updated.

Sync node data in the Cluster

With many node changes, the best option is to have opHA sync all the node data to the pollers. You can either open the opHA menu, view each poller and click "Sync all nodes", or run the following command on the primary:

sudo /usr/local/omk/bin/opha-cli.pl act=sync-all-nodes
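Following the advice to sync once after many changes, one way to chain the steps is to update every node first and trigger a single sync-all-nodes at the end, skipping the sync if any update failed. This is a sketch under our own assumptions: it runs on the primary with root privileges, node names arrive on stdin one per line, and NODE_ADMIN, OPHA_CLI and update_then_sync are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: apply a node_admin.pl change to each node, then sync once.
# NODE_ADMIN, OPHA_CLI and update_then_sync are hypothetical names.
NODE_ADMIN="${NODE_ADMIN:-/usr/local/nmis9/admin/node_admin.pl}"
OPHA_CLI="${OPHA_CLI:-/usr/local/omk/bin/opha-cli.pl}"

# update_then_sync SETTING...: node names are read from stdin, one per
# line; each SETTING (e.g. entry.activated.opConfig=1) is passed to act=set.
update_then_sync() {
    local node failed=0
    while IFS= read -r node; do
        if [ -z "$node" ]; then
            continue
        fi
        # </dev/null stops node_admin.pl from consuming the node list
        if ! "$NODE_ADMIN" act=set "node=$node" "$@" </dev/null; then
            echo "failed to update $node" >&2
            failed=$((failed + 1))
        fi
    done
    if [ "$failed" -gt 0 ]; then
        echo "$failed node(s) failed; not syncing" >&2
        return 1
    fi
    "$OPHA_CLI" act=sync-all-nodes
}
```

For example, from a script run with sudo: /usr/local/nmis9/admin/node_admin.pl act=list group=GROUPNAME | update_then_sync entry.activated.opConfig=1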