
Introduction

To assist with batch node operations, NMIS includes a small script for importing nodes from a CSV file. From version 9.1.1G onwards, more fine-grained tools are also available. They are described on the page titled Node Administration Tools.

IMPORTANT: The import_nodes.pl script was updated on 10 Dec 2020 to better handle node activation, which had been causing problems with nodes. If you use this tool, update it from the NMIS9 GitHub repository. The links are below.

...

The bulk import script is located at /usr/local/nmis9/admin/import_nodes.pl, and a sample CSV file is provided at /usr/local/nmis9/admin/samples/import_nodes_sample.csv.

...

```
0.00 Begin
0.00 Loading the Import Nodes from /usr/local/nmis9/admin/import.csv done in 0.00
0.00 Processing nodes
0.00 Processing newnode
UPDATE: node=newnode host=127.0.0.1 group=DataCenter
=> Successfully updated node newnode.
0.00 Processing newnode end
0.00 Processing import_test2
UPDATE: node=import_test2 host=127.0.0.1 group=Sales
=> Successfully updated node import_test2.
0.00 Processing import_test2 end
0.00 Processing import_test3
UPDATE: node=import_test3 host=127.0.0.1 group=DataCenter
=> Successfully updated node import_test3.
0.01 Processing import_test3 end
0.01 Processing import_test1
UPDATE: node=import_test1 host=127.0.0.1 group=Branches
=> Successfully updated node import_test1.
0.01 Processing import_test1 end
0.01 End processing nodes
```
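With many nodes, reading this output line by line becomes tedious. The following is a minimal sketch, not part of NMIS, that counts how many nodes were processed and how many reported a successful update. It assumes the output format shown above: one `Processing <node> end` line per node and one `Successfully updated node <node>.` line per successful update. The function name `summarise_import` is hypothetical, and other outcomes (for example newly added nodes) may be reported with different wording, so treat the counts as a rough check only.

```shell
# Hypothetical helper (not part of NMIS): summarise import_nodes.pl output
# read from stdin. Assumes the log format shown above, one message per line;
# messages for other outcomes may be worded differently between versions.
summarise_import() {
  awk '
    / Processing [^ ]+ end$/         { processed++ }  # one line per node handled
    /Successfully updated node /     { updated++ }    # one line per successful update
    END {
      printf "processed=%d updated=%d failed_or_other=%d\n",
             processed, updated, processed - updated
    }'
}
```

For example, if the output has been saved to a file named import.log (a hypothetical name), `summarise_import < import.log` on the four-node run shown above should print `processed=4 updated=4 failed_or_other=0`.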

Import nodes from the primary into the pollers (opHA)

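The primary can also be used to import nodes into the pollers. To do this, add a cluster_id column to the CSV header and set it, for each node, to the cluster_id of the poller the node should be assigned to: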

```csv
name,host,group,role,community,netType,roleType,activated.NMIS,activated.opConfig,cluster_id
import_test1,127.0.0.1,Branches,core,nmisGig8,lan,default,1,1,a515c63a-0d11-4bcb-a402-39a4600aebb9
```

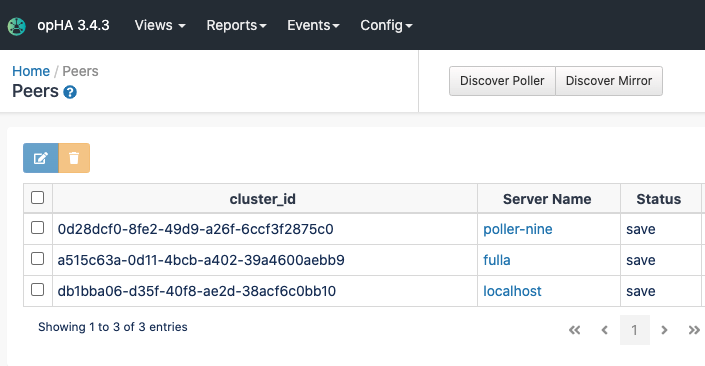
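Before passing a CSV like this to import_nodes.pl, it can help to catch rows whose field count does not match the header, or rows with a blank cluster_id. The following is a minimal sketch, not part of NMIS or opHA; the function name `check_import_csv` is hypothetical. It assumes simple comma-separated values with no quoted fields containing commas (such fields would be misreported), and it only checks cluster_id when the header actually has that column.

```shell
# Hypothetical pre-import check (not part of NMIS or opHA).
# Assumes plain comma-separated values: no quoted fields containing commas.
# Reports rows whose field count differs from the header, and rows with an
# empty cluster_id when the header has a cluster_id column.
# Returns a non-zero status if any problem was found.
check_import_csv() {
  awk -F',' '
    { sub(/\r$/, "") }                 # tolerate CRLF line endings
    NF == 0 { next }                   # ignore blank lines
    !nf {                              # first non-blank line is the header
      nf = NF
      for (i = 1; i <= NF; i++) if ($i == "cluster_id") col = i
      next
    }
    NF != nf { printf "line %d: %d fields, header has %d\n", NR, NF, nf; bad = 1 }
    col && $col == "" { printf "line %d: empty cluster_id\n", NR; bad = 1 }
    END { exit bad }
  ' "$1"
}
```

For example, `check_import_csv /usr/local/nmis9/admin/import.csv` prints nothing and returns 0 when every row is consistent with the header.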

The cluster_id of each poller can be checked in either of these ways:

- Using the opha-cli tool:

  ```
  /usr/local/omk/bin/opha-cli.pl act=list_peers
  cluster_id id server_name status
  a515c63a-0d11-4bcb-a402-39a4600aebb9 614f3ea8626660a3e47f4801 poller1 save
  ```

- In the opHA GUI.
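When filling in the cluster_id column for many nodes, it can be convenient to look up a poller's cluster_id by name from the list_peers output. The following is a minimal sketch, not part of opHA; the function name `cluster_id_for` is hypothetical, and it assumes the whitespace-separated column layout shown in the list_peers example (cluster_id, id, server_name, status).

```shell
# Hypothetical helper (not part of opHA): reads the output of
# "opha-cli.pl act=list_peers" on stdin and prints the cluster_id of the
# peer whose server_name equals $1.
# Assumes the whitespace-separated layout: cluster_id id server_name status
cluster_id_for() {
  awk -v name="$1" '
    $1 == "cluster_id" { next }        # skip the header row
    $3 == name { print $1; found = 1 } # column 3 is server_name
    END { exit !found }                # non-zero status if no peer matched
  '
}
```

For example, `/usr/local/omk/bin/opha-cli.pl act=list_peers | cluster_id_for poller1` would print `a515c63a-0d11-4bcb-a402-39a4600aebb9` for the peer list shown above.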

The nodes are transferred to the pollers when the opHA cron job runs. The transfer can also be triggered manually:

```
/usr/local/omk/bin/opha-cli.pl act=sync-all-nodes
```

More information is available in the opHA documentation: opHA 3 Redundant Node Polling and Centralised Node Management, section "Managing remote nodes".